Conduct and Interpret a Logistic Regression

What is Logistic Regression?

Logistic regression is the appropriate regression analysis to conduct when the dependent variable is dichotomous (binary). Like all regression analyses, logistic regression is a predictive analysis. It is used to describe data and to explain the relationship between one binary dependent variable and one or more independent variables, which may be continuous-level (interval or ratio scale) or categorical.

Standard linear regression requires the dependent variable to be measured on a continuous-level (interval or ratio) scale. How can we apply the same principle to a dichotomous (0/1) variable? Logistic regression treats the dependent variable as a stochastic event. For instance, if we analyze a pesticide's kill rate, the outcome is either killed or alive. Since even the most resistant bug can only be in one of these two states, logistic regression works with the probability of the bug being killed. If the predicted probability of killing the bug is greater than 0.5, the bug is classified as dead; if it is less than 0.5, it is classified as alive.

It is quite common to run a regular linear regression analysis with dummy independent variables. A dummy variable is a binary variable that is treated as if it were continuous. Practically speaking, a dummy variable shifts the intercept, thereby creating a second, parallel line above or below the estimated regression line.
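The intercept shift can be sketched in a few lines of Python. The intercept, slope, and dummy effect below are made-up values chosen only for illustration; they do not come from any fitted model.

```python
# Hypothetical coefficients for illustration only (not estimated from data).
def predict(x, dummy, intercept=2.0, slope=0.5, dummy_effect=1.5):
    """Linear prediction with one continuous predictor and one 0/1 dummy."""
    return intercept + slope * x + dummy_effect * dummy

xs = [0, 1, 2, 3]
line_group0 = [predict(x, 0) for x in xs]  # regression line for dummy = 0
line_group1 = [predict(x, 1) for x in xs]  # regression line for dummy = 1

# The vertical gap between the two lines is the same at every x,
# which is what makes them parallel.
gaps = [b - a for a, b in zip(line_group0, line_group1)]
print(gaps)
```

Because the dummy enters the equation additively, it changes only where the line starts, not how steep it is.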

Alternatively, we could simply fit a multiple linear regression with a dummy dependent variable. This approach, however, has two major shortcomings. First, it can produce predicted probabilities outside of the (0,1) interval. Second, the residuals cannot have constant variance: for any predicted value the residual can take only two values, so the residuals fall on two parallel lines in the zpred*zresid plot.
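A small sketch makes the first shortcoming concrete. The dose and outcome values below are invented for illustration, and the helper `fit_line` is a plain least-squares fit written for this example rather than a library routine.

```python
def fit_line(xs, ys):
    """Ordinary least squares for one predictor: returns (intercept, slope)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope

# Hypothetical data: pesticide dose and whether the bug was killed (1) or not (0).
doses = [1, 2, 3, 4, 5, 6, 7, 8]
killed = [0, 0, 0, 0, 1, 1, 1, 1]

intercept, slope = fit_line(doses, killed)
fitted = [intercept + slope * x for x in doses]

# Each residual is either (0 - fitted) or (1 - fitted), so the residuals
# lie on two parallel lines when plotted against the fitted values.
residuals = [y - f for y, f in zip(killed, fitted)]

for x, f in zip(doses, fitted):
    print(f"dose {x}: predicted 'probability' {f:.3f}")
```

With these numbers the lowest dose gets a negative predicted value and the highest dose a value above 1, neither of which is a valid probability.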

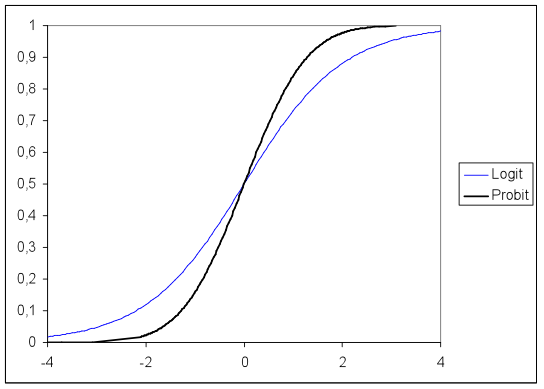

To solve these shortcomings we can use a logistic function to restrict the probability values to (0,1). The logistic function is p(z) = 1/(1+exp(-z)). Setting z equal to the linear predictor and solving for it gives ln(p/(1-p)) = a + b*x. The term ln(p/(1-p)) is called the log odds, or logit. Sometimes a probit model is used instead of a logit model. The following graph shows the difference between a logit and a probit model for values in the range [-4,4]. Both models are commonly used for binary outcomes; in most cases a model is fitted with both functions and the function with the better fit is chosen. The probit model assumes that the underlying errors follow a normal distribution, whereas the logit model assumes a logistic distribution. The two usually give very similar results, and the difference is usually only visible in small samples.
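The two link functions can be compared numerically with the standard library alone. This is a minimal sketch; the function names `logistic`, `logit`, and `probit_cdf` are chosen for this example, and the probit curve is computed as the standard normal cumulative distribution function via `math.erf`.

```python
import math

def logistic(z):
    """Logistic function: maps any real number to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def logit(p):
    """Log odds: the inverse of the logistic function."""
    return math.log(p / (1.0 - p))

def probit_cdf(z):
    """Standard normal CDF, the curve used by the probit model."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

# Tabulate both curves over the range [-4, 4].
for z in range(-4, 5):
    print(f"{z:+d}  logit model: {logistic(z):.4f}  probit model: {probit_cdf(z):.4f}")
```

Both curves pass through 0.5 at zero; the logistic curve approaches 0 and 1 more slowly, which is the main visible difference between them on a common scale.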

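At the center of the logistic regression analysis lies the task of estimating the log odds of an event. Mathematically, logistic regression estimates a multiple linear regression function defined as

$$\ln\left(\frac{p}{1-p}\right) = a + b_1 x_1 + b_2 x_2 + \dots + b_k x_k$$

where p is the probability of the event, a is the intercept, and b_1 to b_k are the coefficients of the k independent variables x_1 to x_k.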
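As a rough illustration of how estimated log odds translate into a predicted probability, the sketch below evaluates a linear combination of predictors for one observation. The intercept, coefficients, and scores are hypothetical values picked for the example, not estimates from the study described later.

```python
import math

def log_odds(intercept, coefs, values):
    """Linear predictor: intercept plus the sum of coefficient * value."""
    return intercept + sum(b * x for b, x in zip(coefs, values))

def probability(z):
    """Convert log odds back to a probability with the logistic function."""
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical model with two predictors (illustrative numbers only).
intercept = -4.0
coefs = [0.05, 0.03]
pupil = [60.0, 50.0]          # one pupil's scores on the two predictors

z = log_odds(intercept, coefs, pupil)
p = probability(z)
predicted_class = 1 if p > 0.5 else 0   # cut-off probability of 0.5

# exp(coefficient) is the odds ratio for a one-unit increase in that predictor.
odds_ratio_first = math.exp(coefs[0])

print(f"log odds = {z:.2f}, probability = {p:.3f}, class = {predicted_class}")
print(f"odds ratio for the first predictor = {odds_ratio_first:.3f}")
```

A positive log odds value always corresponds to a probability above 0.5, so classifying at the 0.5 cut-off is the same as checking the sign of the linear predictor.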

Logistic regression is similar to discriminant analysis. Discriminant analysis uses the regression line to split a sample into two groups along the levels of the dependent variable. Whereas logistic regression uses the concept of probabilities and log odds with a cut-off probability of 0.5, discriminant analysis cuts the geometrical plane that is represented by the scatter cloud. The practical difference lies in the assumptions of the two methods. If the data are multivariate normal, the variances and covariances are homoscedastic, and the independent variables are linearly related, then discriminant analysis is preferred because it is more statistically powerful and efficient. Under these conditions, discriminant analysis is typically more accurate than logistic regression in predicting the classification of the dependent variable.

The Logistic Regression in SPSS

In terms of logistic regression, let us consider the following example:

A research study is conducted on 107 pupils. These pupils have been measured with five different aptitude tests, one for each important category (reading, writing, understanding, summarizing, etc.). How well do these aptitude tests predict whether the pupils pass the year-end exam?

First we need to check that all cells in our model are populated. Since we don’t have any categorical variables in our design we will skip this step.

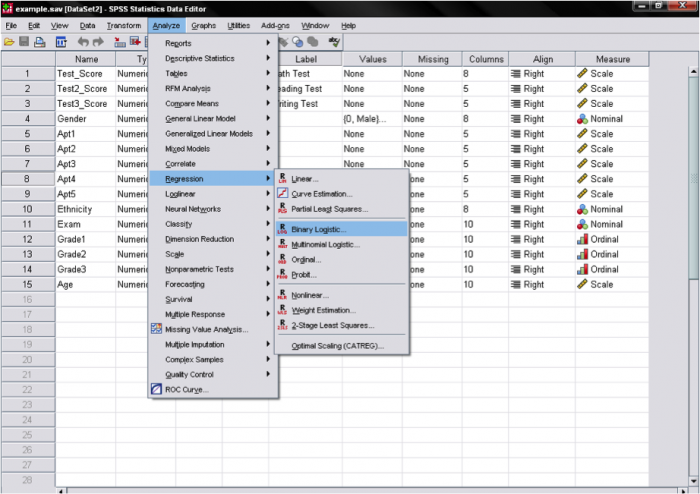

Logistic Regression is found in SPSS under Analyze/Regression/Binary Logistic…

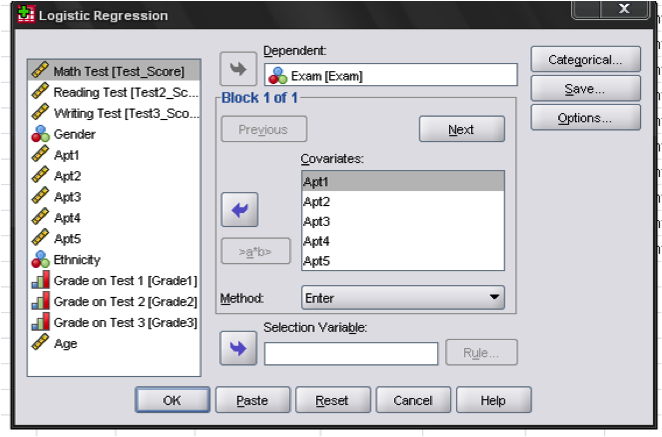

This opens the dialog box to specify the model. Here we enter the nominal variable Exam (pass = 1, fail = 0) into the dependent variable box and all aptitude tests as the first block of covariates in the model.

The menu Categorical… allows us to specify contrasts for categorical variables (which we do not have in our logistic regression model), and Options… offers several additional statistics, which we don’t need.
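For readers who want to see what such a model fit involves outside of SPSS, here is a minimal Python sketch. It makes several assumptions that are not part of the example above: the scores are invented, only two tests are used instead of five, and the coefficients are found by plain gradient ascent on the log-likelihood, which is a simplification and not the estimation routine SPSS uses.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def linear_predictor(coefs, row):
    # coefs[0] is the intercept; the rest pair up with the predictors.
    return coefs[0] + sum(b * v for b, v in zip(coefs[1:], row))

def log_likelihood(rows, labels, coefs):
    total = 0.0
    for row, y in zip(rows, labels):
        p = sigmoid(linear_predictor(coefs, row))
        total += y * math.log(p) + (1 - y) * math.log(1 - p)
    return total

def fit_logistic(rows, labels, steps=5000, rate=0.05):
    """Maximize the log-likelihood by simple gradient ascent."""
    coefs = [0.0] * (len(rows[0]) + 1)
    n = len(rows)
    for _ in range(steps):
        grad = [0.0] * len(coefs)
        for row, y in zip(rows, labels):
            err = y - sigmoid(linear_predictor(coefs, row))
            grad[0] += err
            for j, v in enumerate(row):
                grad[j + 1] += err * v
        coefs = [c + rate * g / n for c, g in zip(coefs, grad)]
    return coefs

# Hypothetical standardized scores on two aptitude tests, and exam result
# (pass = 1, fail = 0). The groups overlap, so the estimates stay finite.
scores = [(-1.5, -1.0), (-1.0, 0.5), (-0.5, -0.5), (0.0, -1.0), (0.0, 1.0),
          (0.5, 0.0), (1.0, -0.5), (1.5, 1.0), (-0.8, 0.2), (0.8, 0.3)]
passed = [0, 0, 1, 0, 1, 0, 1, 1, 0, 1]

coefs = fit_logistic(scores, passed)
print("intercept and coefficients:", [round(c, 3) for c in coefs])
print("log-likelihood:", round(log_likelihood(scores, passed, coefs), 3))
```

Each coefficient is on the log odds scale, so a positive value means that higher scores on that test are associated with higher odds of passing.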